Posted: Apr 10, 2011 8:36 am

(Not yet) understanding complex systems

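A complex system is composed of a large set of interacting parts called agents, whose individual behaviors are not necessarily complex in themselves, but which interact repeatedly enough for the behavior of the whole system to be hard to predict. Complex systems abound; here are some examples (in brackets, the agents of the system):

- A cell's metabolism (biochemicals)

- The immune system (immune cells)

- The brain (neurons)

- An ant colony (ants)

- An ecosystem (living beings)

- A language (speakers)

- The global economy (people)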

1. Emergence in the Game of Life

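Conway's Game of Life is a special case of a cellular automaton, specified by a set of rules operating on configurations of the cells of a 2-dimensional grid. Every cell is in one of two states, Off or On, or more vividly, dead or alive. The rules specifying what happens to an individual cell at each step are as follows:

- If a dead (empty) cell has exactly three living neighbors (diagonal neighbors included), it becomes alive (a birth occurs).

- If a living cell has fewer than two living neighbors, it dies (death by isolation).

- If a living cell has more than three living neighbors, it also dies (death by congestion).

- Otherwise, the cell stays in the state it was in before.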

Consider first a 2×2 square of living cells. As the reader can check, every living cell has three living neighbors and no dead cell has more than two, which means that nothing happens and the configuration stays the same forever. This is our first, trivial emergent pattern, which will be named "block".

The next one, three living cells in a row, is already a little more fun. At each turn two cells die and two other cells are born, which produces an alternating pattern. Although it is not as stable as our first pattern, I hope that you have no difficulty in naming this pattern "blinker".

Here is where the fun really begins: this pattern looks like it takes four steps to reappear unchanged, except that it has "moved down". But what has moved down? Certainly not any cell: they have all stayed in place, some coming to life and others dying. Nevertheless, I will be playing the "emergence" card in order to christen that pattern "glider", wherever it appears on the grid.
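The three patterns can be checked mechanically. What follows is only a minimal sketch, assuming the standard rules (a birth on exactly three living neighbors, survival on two or three) and an unbounded grid stored as a set of living cells; the names `step` and `run` and the coordinates chosen for each pattern are mine, not part of the game's definition.

```python
from collections import Counter

def step(live):
    """Advance one generation; `live` is a set of (row, col) living cells."""
    # For every cell, count its living neighbors (diagonal neighbors included).
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly three neighbors; a living cell survives on two or three.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}

def run(live, generations):
    """Apply `step` repeatedly and return the final set of living cells."""
    for _ in range(generations):
        live = step(live)
    return live

block = {(0, 0), (0, 1), (1, 0), (1, 1)}
blinker = {(1, 0), (1, 1), (1, 2)}
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

print(step(block) == block)            # the block never changes
print(run(blinker, 2) == blinker)      # the blinker repeats every two steps
moved = {(r + 1, c + 1) for (r, c) in glider}
print(run(glider, 4) == moved)         # same shape, shifted after four steps
```

In this orientation the glider ends up one cell down and one cell to the right after four generations. Note that the code never manipulates a "glider" object: it only flips individual cells.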

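As you can imagine, some people have taken the game further:

"An eater can eat a glider in four generations. Whatever is being consumed, the basic process is the same. A bridge forms between the eater and its prey. In the next generation, the bridge region dies from overpopulation, taking a bit out of both eater and prey. The eater then repairs itself. The glider usually cannot. If the remainder of the prey dies out as with the glider, the prey is consumed." [Poundstone 1985, quoted in Dennett 2003]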

Is this just bullshit? Consider that by using gliders, eaters, guns, and many other sophisticated patterns, Conway and others have managed to build a gigantic configuration that acts like a universal computer [Chapman 2002]. That is a pretty impressive achievement, and it would have been impossible without accepting that, for instance, "gliders" really exist!

2. Features

The bad news is that complex systems do not seem to share many common features. Here are two of them, the first pretty basic and the second more sophisticated.

Oscillations

A typical feature of a complex system is a sustained oscillation that is endogenous, i.e. not explained by external inputs. The Lotka-Volterra predator-prey model is the prime example where the origin of such oscillations is very well understood. When predators are numerous, prey become rare and predators starve; after some time they die off and prey can flourish again, which in turn feeds the predators... and the cycle of life makes another merry-go-round. By analogy, when struggling to explain similar oscillations in, say, the global economy, it makes sense to look for such feedback loops.
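To see the feedback loop at work, here is a minimal sketch of the Lotka-Volterra equations integrated numerically. The parameter values, the starting populations and the function names are arbitrary assumptions chosen for illustration; nothing is fed into the system from outside, and yet the populations keep cycling.

```python
def lotka_volterra(prey, pred, a=1.0, b=0.1, c=1.5, d=0.075):
    """Return the growth rates (d prey/dt, d pred/dt)."""
    return (a * prey - b * prey * pred,    # prey breed, and get eaten
            d * prey * pred - c * pred)    # predators feed, and starve

def simulate(prey, pred, dt=0.01, steps=3000):
    """Integrate with the classical fourth-order Runge-Kutta scheme."""
    history = [(prey, pred)]
    for _ in range(steps):
        k1 = lotka_volterra(prey, pred)
        k2 = lotka_volterra(prey + 0.5 * dt * k1[0], pred + 0.5 * dt * k1[1])
        k3 = lotka_volterra(prey + 0.5 * dt * k2[0], pred + 0.5 * dt * k2[1])
        k4 = lotka_volterra(prey + dt * k3[0], pred + dt * k3[1])
        prey += dt * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        pred += dt * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
        history.append((prey, pred))
    return history

history = simulate(prey=10.0, pred=5.0)
prey_values = [x for x, _ in history]
# With these parameters the prey equilibrium is c / d = 20;
# the population keeps swinging below and above it.
print(min(prey_values), max(prey_values))
```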

Power laws

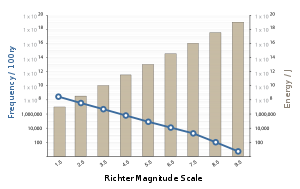

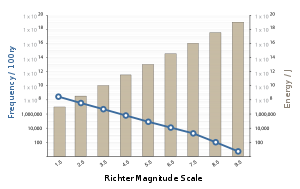

Look at the above picture. The blue line shows how many earthquakes of each magnitude have happened in the last century. Note that both axes are logarithmic, the magnitude axis implicitly so, since the Richter scale is itself logarithmic (an earthquake of magnitude 6 has 10 times the measured amplitude of one of magnitude 5, 100 times that of one of magnitude 4, etc.). On those logarithmic axes, you see a rather nice straight line (indeed, maybe just a bit too nice to be completely true).

That would already qualify as a curiosity if it concerned only earthquakes. But now replace earthquakes with people, and magnitude with fortune. You also get roughly a straight line. Or take English words ranked by their occurrences in written text [Zipf 1935]. Or market days, ranked by variations of the price of cotton [Mandelbrot 1997]. Straight lines again. A distribution like the above is said to follow a power law, and power laws are pretty ubiquitous in complex systems. In fact, they are sometimes said to be a signature of complex systems.
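Why a straight line? A short derivation, with symbols that are my own notation rather than anything taken from the cited works: if the number of events of size $x$ falls off as a power of $x$, taking logarithms turns the curve into a line whose slope is the exponent.

```latex
N(x) = C\,x^{-\alpha}
\quad\Longrightarrow\quad
\log N(x) = \log C - \alpha \log x
```

For earthquakes the magnitude $M$ is already the logarithm of an amplitude, so the same relation reads $\log_{10} N = a - b\,M$, a form usually called the Gutenberg-Richter law.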

Technical stuff for statisticians, you might think. But in fact there is an essential qualitative difference between a distribution following a power law and the classical Gaussian bell curve. In the latter case, exceptional values can be discarded because they are rare enough to affect the mean only infinitesimally. Not so with the power law. However exceptional the values you consider, their relative weight will always be on par with that of the less exceptional values. That means that, when the tail is heavy enough, the sample mean converges very slowly or not at all, and the law of large numbers is of little practical help.
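A quick numerical experiment makes the difference tangible. This is only a sketch: the sample size, the seed and the tail exponent 1.1 are arbitrary assumptions, and `share_of_largest` is a helper of my own. It measures how much of the total is carried by the single most exceptional value.

```python
import random

def share_of_largest(samples):
    """Fraction of the total that is carried by the single largest value."""
    return max(samples) / sum(samples)

rng = random.Random(42)   # fixed seed so that the run is repeatable
n = 100_000

# Bell curve: absolute values of standard Gaussian draws.
gaussian = [abs(rng.gauss(0.0, 1.0)) for _ in range(n)]
# Power law: Pareto draws with tail exponent 1.1 (an arbitrary heavy-tailed choice).
pareto = [rng.paretovariate(1.1) for _ in range(n)]

print(share_of_largest(gaussian))   # negligible: no single draw matters
print(share_of_largest(pareto))     # one draw carries a visible part of the sum
```

In the Gaussian sample the largest value is lost in the crowd; in the Pareto sample a single draw out of a hundred thousand still weighs noticeably on the total, which is why the average refuses to settle down.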

3. Tools

How are we to tame complex systems? The obvious empiricist answer would be that since we can't predict the exact outcome of a complex system, we should approach it statistically, by computing means, correlations, variances. But we have just seen why it won't work that way. In the case of complex systems following power laws, what we used to call the statistical noise just doesn't average out. Except in some gentle cases, doing bell-curve statistics might do more harm than good. [Taleb 2007]

Since the mathematics seems to be of little help, what we are left with is computer simulation. The obvious approach is to model the behavior and the interactions of the agents and to let the system unfold. That is what is done most of the time, but the problem is that the behavior and interactions of the agents can always be modeled in many different ways that yield qualitatively very different results.

In contrast, the genetic algorithm [Holland 1975] is a very efficient tool when we don't know everything about the structure of our system (and we usually don't), but we know or suspect that it has been under a certain selection pressure. It mimics evolution by making successful agents interbreed through crossing-over of their "genetic" code. Although it seems at first glance to carry a lot of overhead, the genetic algorithm has proved surprisingly efficient at finding good (but not necessarily optimal) solutions to hard problems [Mitchell 2009].
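To make the recipe concrete, here is a minimal sketch of a genetic algorithm on bit strings. Everything specific in it is an assumption of mine for the sake of illustration: the toy fitness function (count the 1 bits), the truncation selection, the single-point crossing-over, the parameter values and the name `evolve`. Real applications differ mostly in how the genome is encoded and how fitness is measured.

```python
import random

def evolve(fitness, genome_length=40, population_size=60,
           generations=80, mutation_rate=0.01, seed=0):
    """Evolve bit-string genomes; return the best one and the fitness trace."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        # Selection pressure: only the fitter half gets to breed.
        parents = population[:population_size // 2]
        children = [population[0][:]]        # elitism: keep the best as is
        while len(children) < population_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, genome_length)   # single-point crossing-over
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)
        population = children
    best = max(population, key=fitness)
    best_per_generation.append(fitness(best))
    return best, best_per_generation

# Toy fitness: count the 1 bits, so the optimum is the all-ones genome.
best, trace = evolve(fitness=sum)
print(trace[0], trace[-1])   # best fitness at the start and at the end
```

Because the best genome is always carried over unchanged, the best fitness can never decrease from one generation to the next.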

4. Biology

Talking about a complex systems approach in biology sounds a bit like a tautology. As the reader will have noticed, the complex systems approach intends to build on the tremendous explanatory power that the theory of evolution has brought to what might be the most complex of all of nature's phenomena, life.

So what can complex systems give back to biology? In a widely cited work, complexity guru Stuart Kauffman has argued that evolution is not playing solo, and that non-evolutionary self-organizing processes had an essential role to play in the genesis, as well as in the flourishing, of life on Earth [Kauffman 1993]. But while the significance of Kauffman's findings remains quite controversial, there is one fact in the theory of evolution that came as a relative surprise at the time, and that the theory of complex systems would have clearly predicted had it already been laid down.

That fact is Gould and Eldredge's observation of punctuated equilibrium [Eldredge 1972]. What they discovered in the fossil record was that the rate of evolution was not more or less constant modulo the statistical noise, as had been conjectured a little lazily. Instead, much of biological diversity arose in sudden bursts, the most famous of them being the Cambrian explosion. But that is exactly what one would expect if speciation events followed a power law.

5. Earth Science

I have already mentioned the power law found in the distribution of seismic events across magnitudes. The fact that the same distribution has been found in simulations of avalanches in a pile of sand suggests a common mechanism of accumulation, rather than a simple coincidence. [Bak 1996]
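For the curious, a sandpile simulation of that kind fits in a few lines. This is a minimal sketch under assumptions of my own (a small square table, a toppling threshold of four grains, grains dropped at random places and lost when they fall off the edge); the helper `drop_grain` and the parameter values are illustrative and not taken from [Bak 1996].

```python
import random

def drop_grain(grid, row, col):
    """Add one grain, topple until stable, return the number of topplings."""
    size = len(grid)
    grid[row][col] += 1
    unstable = [(row, col)]
    topplings = 0
    while unstable:
        r, c = unstable.pop()
        if grid[r][c] < 4:
            continue
        # A site holding four grains or more passes one grain to each neighbor.
        grid[r][c] -= 4
        topplings += 1
        if grid[r][c] >= 4:
            unstable.append((r, c))
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < size and 0 <= nc < size:   # off-table grains are lost
                grid[nr][nc] += 1
                if grid[nr][nc] >= 4:
                    unstable.append((nr, nc))
    return topplings

rng = random.Random(1)
size = 15
grid = [[0] * size for _ in range(size)]
# Size of the avalanche (number of topplings) triggered by each grain.
avalanches = [drop_grain(grid, rng.randrange(size), rng.randrange(size))
              for _ in range(5000)]
print(max(avalanches), sum(1 for a in avalanches if a == 0))
```

Most grains trigger nothing at all, a few trigger avalanches that sweep a large part of the table, and a histogram of `avalanches` on logarithmic axes is the curve to compare with the earthquake plot.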

But the full-blown complex systems approach began with Lovelock and Margulis' Gaia Hypothesis [Lovelock 1974]. Immensely controversial at first, that hypothesis took some adjustments and a simple rebranding under the more politically correct name "Earth System Science" in order to get recognized as a promising avenue for research.

The idea is that in normal times the global conditions of the Earth system (temperatures, chemical compositions) vary much less than they would in the absence of life. That is due to various homeostatic (regulating) feedback loops between the ecosystem and its environment. Besides the specific feedback loops that have been documented, more and more realistic computer models have been designed to show how such welcome feedback loops can appear with organisms subject to natural selection, even though none of the individual organisms directly increases its fitness by its regulating effect on its environment. [Downing 2004]

6. Economics

Economics seems to be badly in need of a complex systems approach. This is because economics has a problem. For the sake of solving the maths involved, its mainstream theories have traditionally worked under a bunch of less than realistic assumptions. The rationale behind it was that working with a decent approximation of reality should yield a decent approximation of how the economy works. But that last assumption is known to fail, as has been understood for quite some time thanks, for example, to the Second Best Theorem [Lipsey 1956] and, from chaos theory, the famous butterfly effect [Lorenz 1963].

So what do complex systems have to offer? I have already mentioned some quite important power laws (about stock markets and the distribution of wealth), and here is the outcome of a nice simulation producing endogenous oscillations in the stock market [Farmer 2004].

The agents of that model are technical traders. A technical trader is someone who doesn't care about the news (external inputs) and who concentrates on finding and exploiting patterns of information in the market. According to the classical theories, the exploitation of such patterns is needed in order to correct the errors of the market and to put it back in equilibrium. In that view, Farmer's simulation first ran exactly as expected, but after the market's initial inefficiencies had more or less leveled out, wild oscillations began to appear, apparently due to each technical trader trying to exploit the information signal created by the others. Any resemblance to actual persons or facts is, of course, purely coincidental...

7. Psychology and artificial intelligence

The brain is a staggeringly complex system, and it would be quite fatuous to claim spectacular successes for complex systems in that field yet. Nevertheless, the computer modeling of the mind, aka artificial intelligence, has long been the typical approach of cognitive psychology, as in Marvin Minsky's "Society of Mind" model, a typical complex system of relatively dumb agents exhibiting complex emergent behaviors. [Minsky 1987]

But the techniques of complex systems have touched the modeling of behaviorist artificial intelligence as well. That is a surprising twist, since the behaviorist black-box approach is in many ways the opposite of the complex systems approach.

The idea of a behaviorist AI is that we shouldn't make any assumption about how the brain is made, but should design an AI system that learns by reinforcement, as the real brain is known to learn. Funnily, while a head-on approach to behaviorist AI seems to lead to intractable performance problems [Tsotsos 1995], a much more efficient solution to behaviorist AI is given by... neural networks evolving through the genetic algorithm! [Miikkulainen 2007]

On a more speculative note, consciousness is a prime candidate for an emergent phenomenon, since it seems to consist in integrating a lot of low-level processes into an experience that feels like one single impression. An elegant theory by the late Francis Crick (yes, the DNA guy) and Christoph Koch, backed by at least some neurological data, suggests that consciousness could be an emergent pattern of coalescing brain waves (i.e. patterns of synchronized neuron firing). [Crick 2003]

8. Fundamental Physics

Complex systems as the key to fundamental physics? Have I lost my mind? For sure, the following section verges on pure science fiction. If it exceeds your tolerance for speculation, then consider it a pretty thought experiment.

The complex systems approach has permeated physics through the study of phase transitions and of thermodynamically open systems, but here I will be going with a much more ambitious idea called "Pancomputationalism" or "Digital Physics".

Digital Physics works under the assumption that, although we experience it as continuous, the world is in fact digital at its finest scale, usually conjectured to be the Planck scale, 20 orders of magnitude below the world of fundamental particles. That means that the world resembles - or is, if you are a theist - a simulation by a gigantic super-parallel computer, with some abstract computation taking place simultaneously at every point of the Planck-scale world. In that model, all the things we know of the world, including space, time, and the particles of quantum physics, would be emergent patterns of that ur-computation.

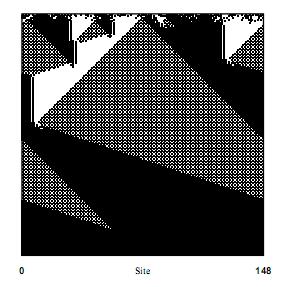

My competence at discussing the foundations of physics will rapidly hit the wall if I go further into the details of Digital Physics. But what I really want to show here is how a very nice computer simulation is able to evolve emergent particles (kind of). [Das 1994]

The picture above represents the run of a particular one-dimensional cellular automaton (see section 1). Each horizontal line represents the state of the automaton at one time step, and the vertical axis represents time running from top to bottom.

That cellular automaton has been evolved through the genetic algorithm in order to solve a problem called the "majority rule". Out of a random original configuration of white and black cells (at the top), it produces a string of black cells at the bottom because the original configuration had more black cells than white ones. The automaton is effectively performing a large-scale vote using only interactions between nearby cells. If you think that it was an easy problem, well, I can tell you it wasn't.
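To get a feeling for why the problem is hard, here is a minimal sketch of the most naive candidate one could think of: every cell simply adopts the majority color of its own neighborhood. Note that this is not the evolved rule of [Das 1994]; the radius of three cells, the ring of 21 cells and the starting configuration are assumptions picked for the demonstration.

```python
def majority_step(cells, radius=3):
    """One synchronous update: each cell adopts the majority color among
    itself and its `radius` neighbors on each side (the row is a ring)."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        black = sum(cells[(i + k) % n] for k in range(-radius, radius + 1))
        # The neighborhood holds 2 * radius + 1 cells, so more than
        # `radius` black cells means that black is the local majority.
        new_cells.append(1 if black > radius else 0)
    return new_cells

# 1 = black, 0 = white. Black is clearly in the majority (17 against 4).
cells = [0] * 4 + [1] * 17
for _ in range(50):
    cells = majority_step(cells)
print("".join(".#"[c] for c in cells))   # → ....#################
```

The small white block never learns that it has lost the election: each of its cells sees four white cells among its seven neighbors and stays put. A rule that really solves the problem has to carry information across the whole row, which is exactly what the moving boundaries in the picture are doing.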

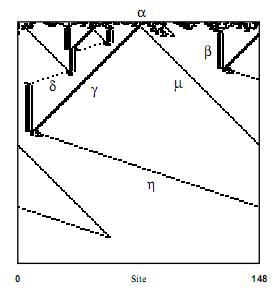

But the most interesting thing is how the automaton does it. Above is the same picture again, but with the emphasis on the boundaries between the homogeneous zones. With some stretch of the imagination, that diagram could be interpreted as the space-time diagram of real physical particles moving and colliding in a one-dimensional space.

Of course, nobody is even remotely suggesting that the example above reflects what real-life particles are made of. But if we push the analogy, we could at least imagine that real-life particles could also be emergent phenomena of an evolved digital physics model computing at a finer scale. And conveniently, physicist Lee Smolin has already proposed a theory of the universe as having evolved under a selection pressure (namely, for maximization of the number of black holes). [Smolin 1999]

9. The future

The main question with the complex systems approach is, will it work? Will it go beyond a mere description of coincidences?

Complex systems theory is not the first craze about systems that the 20th century has enjoyed. Wiener's cybernetics, control theory and general systems theory have already tried to treat complex systems as objects of cross-disciplinary study. While those approaches have had some applications in engineering or management, not much of what we would call real scientific knowledge has been obtained that way.

So why would we think that the 21st century will be different? The obvious answer is Moore's law and the tremendous boost in computing power that we are experiencing. Recall that, because a myriad of agents make their decisions at the same time, complex systems are very demanding computation-wise, even more so on the serial von Neumann architecture that modern computers are only slowly beginning to depart from.

Of course, running the simulation is one thing, and getting a theory out of the results is a very different one. Though it is being put to very good use by today's scientists, our fantastic computing power is quite a new actor in science, and I doubt that we already have every epistemological tool for extracting all we can from those heaps of numbers.

So the future of complex systems seems very uncertain for now. That shouldn't surprise us, since the scientific world is itself a very complex system, so that any projection of what the researchers in complex systems will really achieve is bound to be doubtful at best.

On the other hand, given the ubiquity and the importance of complex systems for humanity, even modest successes could lead to applications that go well beyond the wildest imaginations. And remember the power laws: the potential scope and impact of the forthcoming discoveries could be huge enough for our wild speculations to deserve consideration, even if our assessment of their probability is not that high.

Bibliography

[Bak 1996] Bak, P., How Nature Works, Copernicus, 1996

[Chapman 2002] Life's Universal Computer, http://www.igblan.free-online.co.uk/igblan/ca/

[Crick 2003] Crick, F., Koch, C., A Framework for Consciousness, Nature Neuroscience, 2003

[Das 1994] Das, R., Mitchell, M., Crutchfield, J.P., A Genetic Algorithm Discovers Particle-based Computation in Cellular Automata, in Davidor et al., Parallel Problem Solving from Nature, Springer, 1994

[Dennett 2003] Dennett, D., Freedom Evolves, Penguin Books, 2003

[Downing 2004] Downing, K., Gaia in the Machine, in S.H. Schneider et al., Scientists Debate Gaia, The MIT Press, 2004

[Eldredge 1972] Eldredge, N., Gould, S.J., Punctuated Equilibria: an Alternative to Phyletic Gradualism, in T.J.M. Schopf, ed., Models in Paleobiology, Freeman Cooper, 1972

[Farmer 2004] Farmer, J.D., Gillemot, L., Lillo, F., Mike, S., Sen, A., What Really Causes Large Price Changes?, Quantitative Finance, 2004

[Kauffman 1993] Kauffman, S., The Origins of Order, Oxford University Press, 1993

[Lipsey 1956] Lipsey, R.G., Lancaster, K., The General Theory of Second Best, The Review of Economic Studies, 1956-1957

[Lorenz 1963] Lorenz, E.N., Deterministic Nonperiodic Flow, Journal of the Atmospheric Sciences, 1963

[Lovelock 1974] Lovelock, J.E., Margulis, L., Atmospheric Homeostasis by and for the Biosphere - The Gaia Hypothesis, Tellus, 1974

[Mandelbrot 1997] Mandelbrot, B., Fractals and Scaling in Finance, Springer, 1997

[Miikkulainen 2007] Miikkulainen, R., Evolving Neural Networks, Proceedings of the GECCO conference 2007

[Minsky 1987] Minsky, M., The Society of Mind, Simon and Schuster, 1987

[Mitchell 2009] Mitchell, M., Complexity: a Guided Tour, Oxford University Press, 2009

[Smolin 1999] Smolin, L., The Life of the Cosmos, Oxford University Press, 1999

[Taleb 2007] Taleb, N.N., The Black Swan, Penguin, 2007

[Tsotsos 1995] Tsotsos, J.K., Behaviorist Intelligence and the Scaling Problem, Artificial Intelligence, 1995

[Zipf 1935] Zipf, G.K., The Psychobiology of Language, Houghton Mifflin, 1935

A complex system is composed of a large set of interactors called agents, whose individual behaviors are not necessarily complex in themselves, but who will interact repeatedly enough for the behavior of the whole system to be hard to predict. Complex systems abound, and here are some examples of them (in brackets, the agents of the system) :

- A cell's metabolism (biochemicals)

- The immune system (immune cells)

- The brain (neurons)

- An ant colony (ants)

- An ecosystem (living beings)

- A language (speakers)

- The global economy (people)

1. Emergence in the Game of Life

Conway's Game of Life is a special case of a cellular automaton, specified by a set of rules operating on configurations of the cells of a 2-dimensional grid. Every cell is in one of two states, Off or On, or more vividly, dead or alive. The rules specifying what happens to an individual cell are as follows :

- If an dead (empty) cell has exactly three living neighbors (diagonal neighbors included), it becomes alive (a birth occurs).

- If a living cell has strictly less than two living neighbors, it dies (death by isolation).

- If a living cell has strictly more than three living neighbors, it also dies (death by congestion).

- Otherwise, the cell stays in the same state it was before.

As the reader can check, all living cells have three neighbours, and no dead cell has more than two neighbours,

which means that nothing happens and the configuration stays forever. This is our first, trivial emergent pattern, that will be named "block".

That one is already a little more fun. At each turn two cells die and two other cells are born, which produces an alternating pattern. Although it is not as stable as our first pattern, I hope that you have no difficulty in naming this pattern "blinker".

Here is where the fun really begins : this pattern looks like it takes four steps to reappear unaffected, except that it has "moved down". But what has moved down ? Certainly not any cell, they have all stayed in their place, some coming to live and some others dying. Nevertheless, I will be playing the "emergence" card in order to christen that pattern "glider", wherever it appears on the grid.

As you can imagine, some people have taken the game further :

An eater can eat a glider in four generations. Whatever is being consumed, the basic process is the same. A bridge forms between the eater and its prey. In the next generation, the bridge region dies from overpopulation, taking a bit out of both eater and prey. The eater then repairs itself. The glider usually cannot. If the remainder of the prey dies out as with the glider, the prey is consumed. [Poundstone 1985, quoted in Dennett 2003]

Is this just bullshit ? Consider now that by using gliders, eaters, guns, and many other sophisticated patterns, Conway and others have managed to build a gigantic configuration that acts like an universal computer [Chapman 2002]. That is a pretty impressive achievement, that would have been impossible without accepting that, for instance, "gliders" really exist !

2. Features

The bad news are, complex systems do not seem to share a lot of common features. Here are two of them, the first one pretty basic and the second one more sophisticated.

Oscillations

A typical feature of a complex system is an sustained oscillation that is endogeneous, i.e. not explained by external inputs.The Lotka-Volterra predator-prey model is the prime example where the origin of such oscillations is very well understood. When predators are numerous, preys become rare, predators starve, after some time they die off and preys can flourish again in order to fuel predators... and the cycle of life makes another merry-go-round. By analogy, when struggling to explain the same oscillations in, say, the global economy, it makes sense to look for such feedback loops.

Power laws

Look at the above picture. The blue line shows how many earthquakes of each magnitude have happened in the last century. Note that both axis are logarithmic since the Richter index is a logarithmic index (an earthquake of magnitude 6 is 10 times stronger than one of magnitude 5, 100 times than one of magnitude 4, etc.). On those logarithmic axis, you see a rather nice straight line (indeed, maybe just a bit too nice to be completely true).

That would already qualify as a curiosity if it concerned only earthquakes. But replace now earthquakes with people, and magnitude with fortune. You also get about a straight line. Or take English words ranked by their occurrences in written text [Zipf 1935]. Or market days, ranked by variations of the price of cotton [Mandelbrot 1997]. Straight lines again. A distribution like the above is said to follow a power law, and power laws are pretty ubiquitous in complex systems. In fact, they are sometimes said to be a signature of complex systems.

Technical stuff for statisticians, might you think. But in fact there is an essential qualitative difference between a distribution following a power law or the classical Gauss bell curve. In the latter case, exceptional values can be discarded because they are rare enough to affect the mean only infinitesimally. Not so with the power law. As exceptional as your consider values, their relative weight will always be on par with the less exceptional values. That means that the law of large numbers won't hold in a power law distribution.

3. Tools

How are we to tame complex systems ? The obvious empiricist answer would be that since we can't predict the exact outcome of a complex system, we should approach it statistically, by computing means, correlations, variances. But we have just seen why it won't work that way. In the case of complex systems following power laws, what we use to call the statistical noise just doesn't average out. Except in some gentle cases, doing bell-curve statistics might do more harm than good. [Taleb 2007]

Since the mathematics seem to be of little help, what we are left with is computer simulation. The obvious approach is to model the behavior and the interaction of agents and to let the system unfold. That is what is done most of the time, but the problem is that the behavior and interactions of the agents can always be modeled in a lot of different ways, that yield qualitatively very different results.

In contrast, the genetic algorithm [Holland 1975] is a very efficient tool when we don't know everything about the structure of our system (and we usually don't), but we know or suspect that it has been under a certain selection pressure. It mimics evolution by making successful agents interbreed by crossing-over of their "genetic" code. Although it seems at first glance to produce a lot of overhead, the genetic algorithm has proved surprisingly efficient in finding good (but not optimal) solutions to hard problems [Mitchell 2009].

4. Biology

Talking about a complex systems approach in biology sounds a bit like a tautology. As the reader will have noticed, the complex systems approach intends to build over the tremendous explanatory power that the theory of evolution has brought to what might be the most complex of all of nature's phenomena, life.

So what can complex systems give back to biology ? In a widely cited work, complexity guru Stuart Kauffman has argued that evolution is not playing in solo, and that non-evolutive self-organizing processes had an essential role to play in the genesis, as well as in the flourishing of life on Earth [Kauffman 1993]. But while the significance of Kauffman's findings remains quite controversial, there is one fact in the theory of evolution that has come as a relative surprise at the time, and that the theory of complex systems would have clearly predicted had it already been laid down.

It is Gould and Eldredge's observation of punctuated equilibrium [Eldredge 1972]. What they discovered in the fossil record was that the rate of evolution was not more or less constant modulo the statistical noise, as had been conjectured a little lazily. In contrast, much of the biological diversity has occurred in sudden boosts, the most famous of them being the Cambrian explosion. But that is exactly what one would have expected from the assumption that the speciation events followed a power law.

5. Earth Science

I have already mentioned the power law found in the distribution of sismic events across magnitudes. The fact that the same distribution has been found in simulations of avalanches in a pile of sand suggests a common mechanism of accumulation, rather than a simple coincidence. [Bak 1996]

But the full-blown complex system approach began with Lovelock and Margulis' Gaia Hypothesis [Lovelock 1974]. Immensely controversial at first, that hypothesis took some adjustements and a simple rebrand under the more politically correct "Earth System Science" in order to get recognized as a promising avenue for research.

The idea is that in normal times the global conditions of the Earth system (temperatures, chemical compositions) vary much less than they would vary in the absence of life. That is due to various homeostatic (regulating) feedback loops between the ecosystem and its environment. In addition to some specific feedback loops having been showcased, more and more realistic computer models have been designed to show how such welcome feedback loops can appear with organisms subject to natural selection, even though none of the individual organisms directly increases its fitness by its regulating effect on its environment. [Downing 2004]

6. Economics

Economics seems to be badly in need from a complex system approach. This is because economics has a problem. For the sake of solving the maths involved, its mainstream theories have traditionally worked under a bunch of less than realistic assumptions. The rationale behind it was that working with a decent approximation of reality should yield to a decent approximation of how the economy works. But chaos theory strongly disagrees with that last assumption, as is known since quite some time, thanks for example to the Second Best Theorem [Lipsey 1956] and the famous butterfly effect [Lorenz 1963].

So what do complex systems have to offer ? I have already mentioned some quite important power laws (about stock markets and the distribution of wealth), and following is the outcome of a nice simulation producing endogeneous oscillations in the stock market [Farmer 2004].

The agents of that model are technical traders. A technical trader is someone who doesn't care about the news (external outputs) and who concentrates on finding and exploiting patterns of information they find in the market. According to the classical theories, the exploit of such patterns is needed in order to correct the errors of the market and to put it back in equilibrium. In that view, Farmer's simulation first ran exactly as expected, but after the market's initial inefficiencies more or less leveled out, wild oscillations began to appear, apparently due to each technical traders trying to exploit the information signal created by the others. Any resemblance with actual persons or facts is, of course, purely coincidental...

7. Psychology and artificial intelligence

The brain is a staggeringly complex system, and it would be quite fatuous to claim for spectacular successes for complex systems in that field yet. Nevertheless, the computer modeling of the mind, aka artificial intelligence, has long been the typical approach of cognitive psychology, like in Marvin Minsky's "Society of the Mind" model, a typical complex system of relatively dumb agents exhibiting complex emergent behaviors. [Minsky 1987]

But the techniques of complex systems have touched the modeling of behaviorist artificial intelligence as well. That is a surprising twist since the behaviorist black-box approach is in many ways the converse of the complex systems approach.

The idea of a behaviorist AI is that we shouldn't make any assumption of how the brain is made, but should design an AI system that learns by reinforcement, as the real brain is known to learn. Funnily, while a head-on approach of behaviorist AI seems to lead to intractable performance problems [Tsotsos 1995], a much more efficient solution to behaviorist AI is given by... neural networks evolving through the genetic algorithm ! [Miikkulainen 2007]

On a more speculative note, consciousness is a prime candidate for an emergent phenomenon, since it seems to consist in integrating a lot of low-level processes into an experience that feels like one single impression. An elegant theory by the late Francis Crick (yes, the DNA guy) and Christoph Koch, backed by at least some neurological data, suggests that consciousness could be an emergent pattern of coalescing brain waves (i.e. patterns of synchronized neuron firing). [Crick 2003]

8. Fundamental Physics

Complex systems as the key to fundamental physics ? Have I lost my mind ? For sure, the following section is beginning to touch on pure science fiction. If it exceeds your tolerance for speculation, then consider it a pretty thought experiment.

The complex systems approach has permeated physics through the study of phase transitions and of thermodynamically open systems, but here I will be going with a much more ambitious idea called "Pancomputationalism" or "Digital Physics".

Digital Physics works under the assumption that, although we experience it as continuous, the world is in fact digital at its finest scale, usually conjectured as being the Planck scale, 20 orders of magnitude below the world of fundamental particles. That means that the world resembles - or is, if you are a theist - a simulation by a gigantic super-parallel computer, with some abstract computation taking place simultaneously at every place of the Planck-scale world. In that model, all the things we know of the world, including space, time, and the particles of quantum physics, would be emergent patterns of that ur-computation.
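The "20 orders of magnitude" figure can be checked with a quick back-of-the-envelope calculation. The sketch below assumes that the "world of fundamental particles" is represented by the size of a proton, which is my own stand-in and not something Digital Physics prescribes. Both constants are rounded.

```python
import math

PLANCK_LENGTH_M = 1.616e-35  # Planck length in metres (rounded)
PROTON_RADIUS_M = 0.84e-15   # approximate proton charge radius in metres (assumed stand-in)


def orders_of_magnitude(larger, smaller):
    """Number of powers of ten separating two lengths."""
    return math.log10(larger / smaller)


if __name__ == "__main__":
    print(round(orders_of_magnitude(PROTON_RADIUS_M, PLANCK_LENGTH_M), 1))
```

With these values the gap comes out just under 20 powers of ten, consistent with the figure quoted above.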

My competence at discussing the foundations of physics will rapidly hit the wall if I go further into the details of Digital Physics. But what I really want to show here is how a very nice computer simulation is able to evolve emergent particles (kind of). [Das 1994]

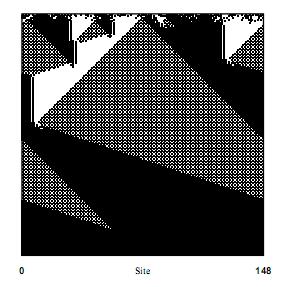

The picture above represents the run of a particular one-dimensional cellular automaton (see section 1). Each horizontal line represents the state of the automaton at one time step, and the vertical axis represents time running from top to bottom.

That cellular automaton has been evolved through the genetic algorithm in order to solve a problem called the "majority rule". Starting from a random initial configuration of white and black cells (at the top), it ends up with a uniform string of black cells at the bottom because the initial configuration had more black cells than white ones. The automaton is effectively performing a large-scale vote using only local interactions between neighboring cells. If you think that it was an easy problem, well, I can tell you it wasn't.
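To see why the problem is not easy, here is a small sketch of the task on a ring of 0/1 cells (0 for white, 1 for black). It does not reproduce the evolved rule of [Das 1994]. Instead it compares two hand-written rules: the naive "copy the local majority" rule, and the Gacs-Kurdyumov-Levin (GKL) rule as it is usually stated in the cellular automata literature. The neighborhood radius of 3, the ring size of 149 and the helper names are assumptions made for the illustration.

```python
import random


def step(cells, rule):
    """Apply one synchronous update to a ring of 0/1 cells."""
    n = len(cells)
    return [rule(cells, i, n) for i in range(n)]


def local_majority(cells, i, n, radius=3):
    """Naive rule: adopt the majority state of the 2*radius+1 surrounding cells."""
    total = sum(cells[(i + d) % n] for d in range(-radius, radius + 1))
    return 1 if total > radius else 0


def gkl(cells, i, n):
    """GKL rule: a 0 cell votes with two cells on its left, a 1 cell with two on its right."""
    if cells[i] == 0:
        votes = cells[i] + cells[(i - 1) % n] + cells[(i - 3) % n]
    else:
        votes = cells[i] + cells[(i + 1) % n] + cells[(i + 3) % n]
    return 1 if votes >= 2 else 0


def run(cells, rule, max_steps):
    """Iterate the rule, stopping early if the configuration no longer changes."""
    for _ in range(max_steps):
        nxt = step(cells, rule)
        if nxt == cells:
            break
        cells = nxt
    return cells


def verdict(cells):
    """Return 1 or 0 if all cells agree, or None if there is no consensus."""
    if all(c == 1 for c in cells):
        return 1
    if all(c == 0 for c in cells):
        return 0
    return None


if __name__ == "__main__":
    random.seed(0)
    start = [random.randint(0, 1) for _ in range(149)]
    majority = 1 if 2 * sum(start) > len(start) else 0
    for rule in (local_majority, gkl):
        final = run(start, rule, max_steps=2 * len(start))
        print(rule.__name__, "true majority:", majority, "verdict:", verdict(final))
```

The naive rule tends to freeze into stripes: any block of at least four identical cells is stable under it, so the "vote" never finishes. Rules like GKL do much better because they move information across the ring, although no local rule is known to get every initial configuration right.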

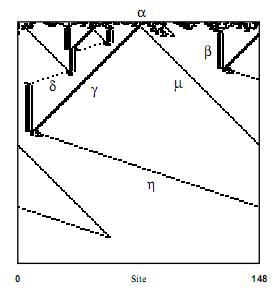

But the most interesting thing is how the automaton does it. Here is the same picture again, but with the emphasis on the frontiers between the homogeneous zones. With a stretch of the imagination, the diagram above could be interpreted as the space-time diagram of real physical particles moving and colliding in a one-dimensional space.
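The filtering step, keeping only the frontiers and discarding the zones themselves, can be sketched in a few lines. This is a simplified illustration: it only detects boundaries between uniformly black and uniformly white zones, and the tiny hand-made history below is invented for the example. A fuller analysis would also have to filter out any regular but non-uniform background pattern.

```python
def frontiers(row):
    """Mark each cell of a ring that differs from its right-hand neighbor."""
    n = len(row)
    return [1 if row[i] != row[(i + 1) % n] else 0 for i in range(n)]


def render(history):
    """Draw one line per time step, with '|' on frontiers and '.' elsewhere."""
    lines = []
    for row in history:
        lines.append("".join("|" if mark else "." for mark in frontiers(row)))
    return "\n".join(lines)


if __name__ == "__main__":
    # Hand-made toy history (not a real automaton run): a white zone growing rightwards.
    history = [
        [0, 0, 0, 1, 1, 1, 1, 1],
        [0, 0, 0, 0, 1, 1, 1, 1],
        [0, 0, 0, 0, 0, 1, 1, 1],
    ]
    print(render(history))
```

Read from top to bottom, one '|' drifts to the right by one cell per step while the other stays put. That is the sense in which a frontier can be followed like a "particle" with a position and a velocity.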

Of course, nobody is even remotely suggesting that the example above reflects what real-life particles are made of. But if we push the analogy, we could at least imagine that real-life particles could also be emergent phenomena of an evolved digital physics model computing at a finer scale. And conveniently, physicist Lee Smolin has already proposed a theory of the universe as having evolved under a selection pressure (namely, for maximization of the number of black holes). [Smolin 1999]

9. The future

The main question with the complex systems approach is, will it work ? Will it go beyond a mere description of coincidences ?

Complex systems theory is not the first craze about systems that the 20th century has enjoyed. Wiener's cybernetics, control theory and general systems theory have already tried to treat complex systems as objects of cross-disciplinary study. While those approaches have had some applications in engineering or management, not much of what we would call real scientific knowledge has been obtained that way.

So why would we think that the 21st century will be different ? The obvious answer is Moore's law and the tremendous boost in computing power that we are experiencing. Recall that, because of their structure, in which a myriad of agents make their decisions at the same time, complex systems are very demanding computation-wise, even more so on the serial von Neumann architecture that modern computers are only slowly beginning to depart from.

Of course, running the simulation is one thing, and getting a theory out of the results is quite another. Though it is already being put to very good use by today's scientists, our fantastic computing power is quite a new actor in science, and I doubt that we already have every epistemological tool for extracting all we can from those bunches of numbers.

So the future of complex systems seems very uncertain for now. That shouldn't surprise us, since the scientific world is itself a very complex system, so that any projection of what the researchers in complex systems will really achieve is bound to be doubtful at best.

On the other hand, given the ubiquity and the importance of complex systems for humanity, even modest successes could lead to applications that go well beyond the wildest imaginations. And remember the power laws : the potential scope and impact of the forthcoming discoveries could be huge enough for our wild speculations to deserve consideration, even if our assessment of their probability is not that high.

Bibliography

[Bak 1996] Bak, P., How Nature Works, Copernicus, 1996

[Chapman 2002] Chapman, P., Life's Universal Computer, 2002, http://www.igblan.free-online.co.uk/igblan/ca/

[Crick 2003] Crick, F., Koch, C., A Framework for Consciousness, Nature Neuroscience, 2003

[Das 1994] Das, R., Mitchell, M., Crutchfield, J.P., A Genetic Algorithm Discovers Particle-based Computation in Cellular Automata, in Davidor et al., Parallel Problem Solving from Nature, Springer, 1994

[Dennett 2003] Dennett, D., Freedom Evolves, Penguin Books, 2003

[Downing 2004] Downing, K., Gaia in the Machine, in S.H. Schneider et al., Scientists Debate Gaia, The MIT Press, 2004

[Eldredge 1972] Eldredge, N., Gould, S.J., Punctuated Equilibria: an Alternative to Phyletic Gradualism, in T.J.M. Schopf, ed., Models in Paleobiology, Freeman Cooper, 1972

[Farmer 2004] Farmer, D., Gillemot, L., Lillo, F., Mike, S., Sen, A., What Really Causes Large Price Changes ?, Quantitative Finance, 2004

[Kauffman 1993] Kauffman, S., The Origins of Order, Oxford University Press, 1993

[Lipsey 1956] Lipsey, R.G., Lancaster, K., The General Theory of Second Best, The Review of Economic Studies, 1956-1957

[Lorenz 1963] Lorenz, E.N., Deterministic Nonperiodic Flow, Journal of the Atmospheric Sciences, 1963

[Lovelock 1974] Lovelock, J.E., Margulis, L., Atmospheric Homeostasis by and for the Biosphere - The Gaia Hypothesis, Tellus, 1974

[Mandelbrot 1997] Mandelbrot, B., Fractals and Scaling in Finance, Springer, 1997

[Miikkulainen 2007] Miikkulainen, R., Evolving Neural Networks, Proceedings of the GECCO conference 2007

[Minsky 1987] Minsky, M., The Society of Mind, Simon and Schuster, 1987

[Mitchell 2009] Mitchell, M., Complexity: a Guided Tour, Oxford University Press, 2009

[Smolin 1999] Smolin, L., The Life of the Cosmos, Oxford University Press, 1999

[Taleb 2007] Taleb, N.N., The Black Swan, Penguin, 2007

[Tsotsos 1995] Tsotsos, J.K., Behaviorist Intelligence and the Scaling Problem, Artificial Intelligence, 1995

[Zipf 1935] Zipf, G.K., The Psychobiology of Language, Houghton-Mifflin, 1935